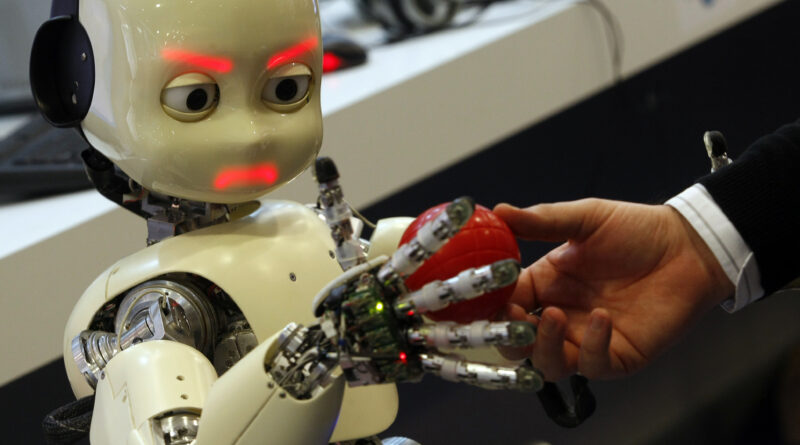

EU seeks to regulate artificial intelligence to protect fundamental rights

Artificial Intelligence (AI) applications are increasingly part of daily life. They are used to make predictions about people’s behaviour, to identify citizens for health and public services, to monitor their performance of various tasks, and to process payments and track finances.

Many of these uses affect fundamental rights, such as the right to privacy and data protection, but also the rights to free movement, freedom of association and political participation. The European Commission has therefore proposed a new regulation that establishes safeguards according to the level of risk posed by AI applications.

Under the proposal, AI systems considered a threat to the safety, livelihoods and rights of people will be banned in the EU. These include applications that manipulate human behaviour to circumvent users’ free will (e.g. toys using voice assistance to encourage dangerous behaviour in minors) and systems that allow ‘social scoring’ by governments.

AI systems identified as high-risk will be subject to strict obligations before they can be put on the market. This category includes systems that can influence access to education and the course of someone’s professional life (e.g. exam scoring), systems with health implications (e.g. robot-assisted surgery), systems used in employment and workers’ management (e.g. CV-sorting software for recruitment), in essential private and public services (e.g. credit scoring) and in border and migration control (e.g. verification of travel documents), as well as all forms of remote biometric identification. The obligations include, for instance, risk assessments, specific authorisations and human oversight.

AI systems identified as posing limited risk, e.g. chatbots, will be subject to transparency rules so that users know they are interacting with a machine and can make informed decisions.

AI systems posing minimal risk, such as video games or spam filters, will be allowed on the European market without any specific requirements.

“On Artificial Intelligence, trust is a must, not a nice to have. With these landmark rules, the EU is spearheading the development of new global norms to make sure AI can be trusted,” said Margrethe Vestager, Executive Vice-President of the European Commission, when presenting the proposal.

Ms Vestager added that the new rules will intervene “where strictly needed: when the safety and fundamental rights of EU citizens are at stake.”

European Digital Rights (EDRi), a coalition of NGOs, said “the proposal has taken a significant step to protect people in Europe from creepy facial recognition by banning law enforcement from using real time remote biometric identification in specific ways, requiring specific national laws and case-by-case authorisation to allow such practices”.

However, the coalition added, many biometric mass surveillance practices by local governments or corporations would remain permitted.

“The draft law does not prohibit the full extent of unacceptable uses of AI and in particular all forms of biometric mass surveillance,” said Sarah Chander, Senior Policy Lead on AI at EDRi.

European consumer organisation BEUC also said the proposal does not adequately protect consumers, for example from the economic harm that AI products and services may cause. BEUC called on the European Parliament and EU governments, which will have to approve the regulation, to strengthen the safeguards.

Claudia Delpero © all rights reserved

Photo by Ferenc Isza © European Commission

Europe Street News is an online magazine covering citizens’ rights in the EU and the UK. We are fully independent and we are committed to providing factual, accurate and reliable information. We believe citizens’ rights are at the core of democracy and information about these topics should be accessible to all. This is why our website and newsletter are available for free. A lot of research is behind every article, so if you found this useful, please consider making a contribution so we can continue and expand our coverage. Thank you!